phi4-mini

phi4-mini 参数量

3.8b tools

phi4-mini 模型介绍

phi4-mini

Phi-4-mini-instruct 是一个轻量级开放模型,基于合成数据和经过筛选的公开网站构建,重点关注高质量、推理密集的数据。该模型属于 Phi-4 模型系列,支持 128K 令牌上下文长度。该模型经过了增强过程,结合了监督微调和直接偏好优化,以支持精确的指令遵循和强大的安全措施。

Phi-4-mini-instruct 支持高达 200064 个标记的词汇表大小。标记器文件已经提供了可用于下游微调的占位符标记,但它们也可以扩展到模型的词汇表大小。

phi4-mini 主要应用场景

该模型适用于广泛的多语言商业和研究用途。该模型为需要以下条件的通用 AI 系统和应用提供了用途:

- 内存/计算受限环境

- 延迟受限场景

- 强推理(尤其是数学和逻辑)。

- 该模型旨在加速语言和多模态模型的研究,用作生成式 AI 驱动功能的构建块。

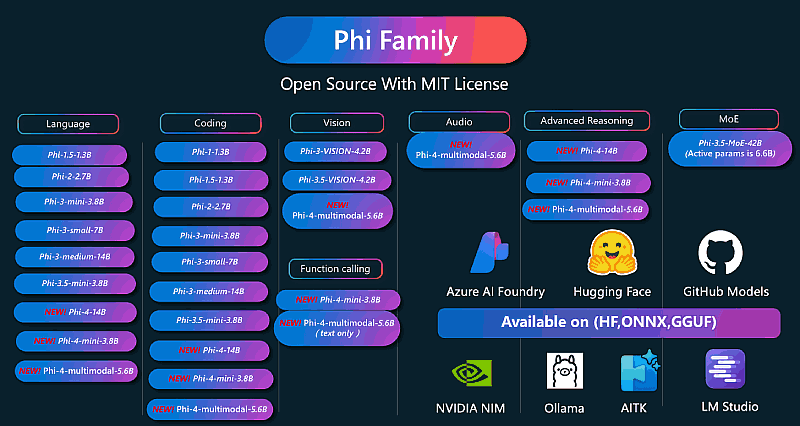

Phi Family

phi4-mini 模型质量

为了解 phi 功能,使用内部基准测试平台将 3.8B 参数 Phi-4-mini-instruct 模型与一系列基于各种基准的模型进行了比较,模型质量的高级概述如下:

| Benchmark | Similar size | 2x size | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Phi-4 mini-Ins | Phi-3.5-mini-Ins | Llama-3.2-3B-Ins | Mistral-3B | Qwen2.5-3B-Ins | Qwen2.5-7B-Ins | Mistral-8B-2410 | Llama-3.1-8B-Ins | Llama-3.1-Tulu-3-8B | Gemma2-9B-Ins | GPT-4o-mini-2024-07-18 | |

| Popular aggregated benchmark | |||||||||||

| Arena Hard | 32.8 | 34.4 | 17.0 | 26.9 | 32.0 | 55.5 | 37.3 | 25.7 | 42.7 | 43.7 | 53.7 |

| BigBench Hard (0-shot, CoT) | 70.4 | 63.1 | 55.4 | 51.2 | 56.2 | 72.4 | 53.3 | 63.4 | 55.5 | 65.7 | 80.4 |

| MMLU (5-shot) | 67.3 | 65.5 | 61.8 | 60.8 | 65.0 | 72.6 | 63.0 | 68.1 | 65.0 | 71.3 | 77.2 |

| MMLU-Pro (0-shot, CoT) | 52.8 | 47.4 | 39.2 | 35.3 | 44.7 | 56.2 | 36.6 | 44.0 | 40.9 | 50.1 | 62.8 |

| Reasoning | |||||||||||

| ARC Challenge (10-shot) | 83.7 | 84.6 | 76.1 | 80.3 | 82.6 | 90.1 | 82.7 | 83.1 | 79.4 | 89.8 | 93.5 |

| BoolQ (2-shot) | 81.2 | 77.7 | 71.4 | 79.4 | 65.4 | 80.0 | 80.5 | 82.8 | 79.3 | 85.7 | 88.7 |

| GPQA (0-shot, CoT) | 25.2 | 26.6 | 24.3 | 24.4 | 23.4 | 30.6 | 26.3 | 26.3 | 29.9 | 39.1 | 41.1 |

| HellaSwag (5-shot) | 69.1 | 72.2 | 77.2 | 74.6 | 74.6 | 80.0 | 73.5 | 72.8 | 80.9 | 87.1 | 88.7 |

| OpenBookQA (10-shot) | 79.2 | 81.2 | 72.6 | 79.8 | 79.3 | 82.6 | 80.2 | 84.8 | 79.8 | 90.0 | 90.0 |

| PIQA (5-shot) | 77.6 | 78.2 | 68.2 | 73.2 | 72.6 | 76.2 | 81.2 | 83.2 | 78.3 | 83.7 | 88.7 |

| Social IQA (5-shot) | 72.5 | 75.1 | 68.3 | 73.9 | 75.3 | 75.3 | 77.6 | 71.8 | 73.4 | 74.7 | 82.9 |

| TruthfulQA (MC2) (10-shot) | 66.4 | 65.2 | 59.2 | 62.9 | 64.3 | 69.4 | 63.0 | 69.2 | 64.1 | 76.6 | 78.2 |

| Winogrande (5-shot) | 67.0 | 72.2 | 53.2 | 59.8 | 63.3 | 71.1 | 63.1 | 64.7 | 65.4 | 74.0 | 76.9 |

| Multilingual | |||||||||||

| Multilingual MMLU (5-shot) | 49.3 | 51.8 | 48.1 | 46.4 | 55.9 | 64.4 | 53.7 | 56.2 | 54.5 | 63.8 | 72.9 |

| MGSM (0-shot, CoT) | 63.9 | 49.6 | 44.6 | 44.6 | 53.5 | 64.5 | 56.7 | 56.7 | 58.6 | 75.1 | 81.7 |

| Math | |||||||||||

| GSM8K (8-shot, CoT) | 88.6 | 76.9 | 75.6 | 80.1 | 80.6 | 88.7 | 81.9 | 82.4 | 84.3 | 84.9 | 91.3 |

| MATH (0-shot, CoT) | 64.0 | 49.8 | 46.7 | 41.8 | 61.7 | 60.4 | 41.6 | 47.6 | 46.1 | 51.3 | 70.2 |

| Overall | 63.5 | 60.5 | 56.2 | 56.9 | 60.1 | 67.9 | 60.2 | 62.3 | 60.9 | 65.0 | 75.5 |

phi4-mini Usage with vLLM

要使用 vLLM 执行推理,可以使用以下代码片段:

from vllm import LLM, SamplingParams

llm = LLM(model="microsoft/Phi-4-mini-instruct", trust_remote_code=True)

messages = [

{"role": "system", "content": "You are a helpful AI assistant."},

{"role": "user", "content": "Can you provide ways to eat combinations of bananas and dragonfruits?"},

{"role": "assistant", "content": "Sure! Here are some ways to eat bananas and dragonfruits together: 1. Banana and dragonfruit smoothie: Blend bananas and dragonfruits together with some milk and honey. 2. Banana and dragonfruit salad: Mix sliced bananas and dragonfruits together with some lemon juice and honey."},

{"role": "user", "content": "What about solving an 2x + 3 = 7 equation?"},

]

sampling_params = SamplingParams(

max_tokens=500,

temperature=0.0,

)

output = llm.chat(messages=messages, sampling_params=sampling_params)

print(output[0].outputs[0].text)

phi4-mini 推理

在获得 Phi-4-mini-instruct 模型 checkpoint 后,用户可以使用此示例代码进行推理:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

torch.random.manual_seed(0)

model_path = "microsoft/Phi-4-mini-instruct"

model = AutoModelForCausalLM.from_pretrained(

model_path,

device_map="auto",

torch_dtype="auto",

trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained(model_path)

messages = [

{"role": "system", "content": "You are a helpful AI assistant."},

{"role": "user", "content": "Can you provide ways to eat combinations of bananas and dragonfruits?"},

{"role": "assistant", "content": "Sure! Here are some ways to eat bananas and dragonfruits together: 1. Banana and dragonfruit smoothie: Blend bananas and dragonfruits together with some milk and honey. 2. Banana and dragonfruit salad: Mix sliced bananas and dragonfruits together with some lemon juice and honey."},

{"role": "user", "content": "What about solving an 2x + 3 = 7 equation?"},

]

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

)

generation_args = {

"max_new_tokens": 500,

"return_full_text": False,

"temperature": 0.0,

"do_sample": False,

}

output = pipe(messages, **generation_args)

print(output[0]['generated_text'])

phi4-mini 模型训练

架构:Phi-4-mini-instruct 有 3.8B 个参数,是一个密集的解码器专用 Transformer 模型。与 Phi-3.5-mini 相比,Phi-4-mini-instruct 的主要变化是 200K 词汇量、分组查询注意以及共享输入和输出嵌入。

输入:文本。它最适合使用聊天格式的提示。

上下文长度:128K 个 token

GPU:512 个 A100-80G

训练时间:21 天

训练数据:5T token

输出:响应输入生成的文本

日期:2024 年 11 月至 12 月之间训练

状态:这是一个在离线数据集上训练的静态模型,公开数据的截止日期为 2024 年 6 月。

支持的语言:阿拉伯语、中文、捷克语、丹麦语、荷兰语、英语、芬兰语、法语、德语、希伯来语、匈牙利语、意大利语、日语、韩语、挪威语、波兰语、葡萄牙语、俄语、西班牙语、瑞典语、泰语、土耳其语、乌克兰语

发布日期:2025 年 2 月

AI 扩展阅读:

发表评论

-

alfred

bakllava

codebooga

codellama

codeup

cogito

command-a

command-r7b-arabic

deepcoder

deepseek-r1

devstral

everythinglm

exaone-deep

falcon

firefunction-v2

gemma3

glm4

goliath

granite3.2-vision

granite3.3

llama2

llama2-chinese

llama3-chinese-8b-instruct

llama3.2-vision

llama3.3

llama4

llava

magistral

mathstral

medllama2

mistral

mistral-small3.1

mistrallite

mixtral

moondream

nexusraven

nomic-embed-text

nous-hermes

open-orca-platypus2

openthinker

orca-mini

phi4-mini

phi4-mini-reasoning

phi4-reasoning

phind-codellama

qwen2.5

qwen2.5vl

qwen3

QwQ

reader-lm

sqlcoder

stable-beluga

tulu3

vicuna

wizard-math

wizard-vicuna

wizardcoder

wizardlm

wizardlm-uncensored

zephyr